A CPU appears to run multiple programs simultaneously because the operating system schedules them to alternate at short time intervals. It does this with a work queue and interval execution, and it performs context switching to save and restore the state of each program. As the number of programs registered in the work queue increases, the waiting time increases, so priority scheduling is used to keep important programs from being delayed.

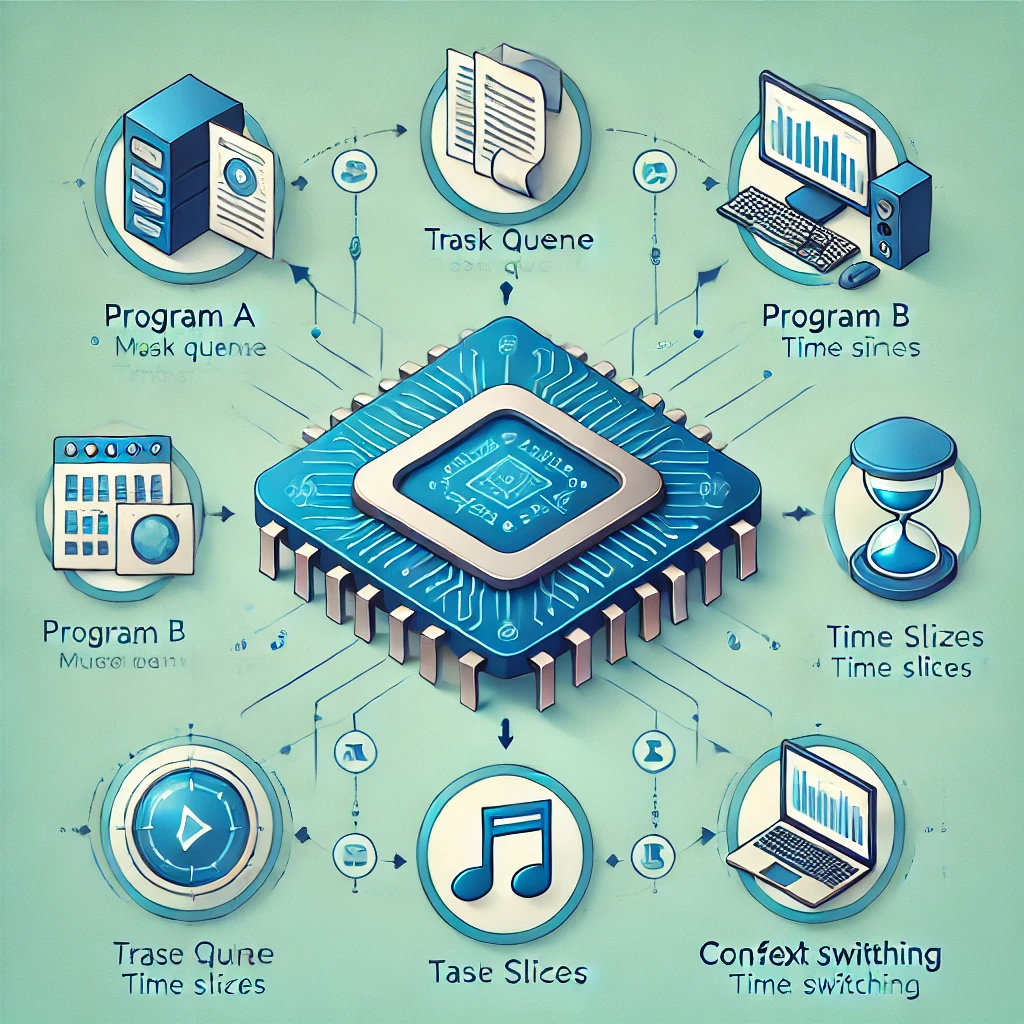

When you’re listening to music and writing a document on your computer, it feels as if two programs are running at the same time, but the computer is actually alternating between them at very short intervals. This is the result of central processing unit (CPU) scheduling, which is part of the computer’s operating system. When a program is launched, the operating system loads it into main memory and registers it in a “work queue,” a list of programs waiting to run. The operating system selects one program from the work queue, runs it on the CPU, and removes it from the work queue when it finishes.

A CPU can run only one program at a time. So how does it look as if two programs, A and B, are running at the same time? Programs are registered in the work queue in the order in which they are requested to run. If A and B simply run one after the other in this order, each until it finishes, then the longer A runs, the longer B’s “wait time,” and it does not look as if the two programs are running at the same time. However, if the CPU alternates between A and B at regular time intervals, both programs appear to run at the same time.
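The run-one-after-the-other case can be sketched in a few lines. This is an illustrative model only: the function name `run_to_completion` and the run times (50 and 5 time units) are made up for the example, and every program is assumed to be registered at time 0.

```python
from collections import deque

def run_to_completion(jobs):
    """Run each (name, run_time) job to the end, in registration order.

    Returns a dict mapping each program name to the time it spent
    waiting in the work queue before it first got the CPU.
    """
    queue = deque(jobs)      # work queue, in registration order
    clock = 0
    wait_times = {}
    while queue:
        name, run_time = queue.popleft()
        wait_times[name] = clock   # waited from time 0 until now
        clock += run_time          # the program holds the CPU until it is done
    return wait_times

waits = run_to_completion([("A", 50), ("B", 5)])
print(waits)  # {'A': 0, 'B': 50}
```

Even though B needs only 5 time units, it waits for all 50 of A's, which is why the two programs do not appear to run together.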

To do this, the CPU’s execution time is divided into several short intervals, with one program running in each interval. Running a program for one interval is called “interval execution,” and the length of time a program runs in each interval is called the “interval time,” which is set to a constant value. In principle, the interval executions of A and B alternate until both programs are finished, but if one program finishes first, the other continues to run on its own.
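A minimal sketch of this alternation, assuming all programs are registered at time 0 and ignoring the cost of switching between them. The function name `round_robin` and the numbers are hypothetical, chosen only for illustration.

```python
from collections import deque

def round_robin(jobs, interval_time):
    """Alternate between programs, one interval at a time.

    jobs is a list of (name, execution_time) pairs.
    Returns the interval executions as (name, start, end) tuples.
    """
    queue = deque(jobs)          # (name, remaining execution time)
    clock = 0
    timeline = []
    while queue:
        name, remaining = queue.popleft()
        ran = min(interval_time, remaining)   # a program may finish mid-interval
        timeline.append((name, clock, clock + ran))
        clock += ran
        if remaining > ran:
            # Not finished: register the program again at the back of the queue.
            queue.append((name, remaining - ran))
    return timeline

timeline = round_robin([("A", 30), ("B", 10)], interval_time=10)
for entry in timeline:
    print(entry)
# ('A', 0, 10)
# ('B', 10, 20)
# ('A', 20, 30)
# ('A', 30, 40)
```

B finishes after its first interval, so the last two intervals both go to A.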

While one program’s interval execution is in progress, the other program waits in the work queue. When A’s interval ends, A stops and the operating system selects the program to run during the next interval. The time required to prepare B for execution after A stops is called the “replacement time,” and it is very short compared with the interval time. During the replacement time, the state of A is saved and the previously saved state of B is restored so that B can run. Even when the same program runs in successive intervals, the operating system still has to decide which program runs next, so a replacement time is needed between every pair of interval executions.

To avoid losing a program’s work when programs are swapped, the operating system saves the state of the program that is stopping and restores the state of the program that is about to run. This is called “context switching,” and it requires the operating system to remember exactly where each program last stopped. Context switching is the work carried out during the replacement time, and it is what makes each program appear to continue uninterrupted.
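The save-and-restore idea can be illustrated with a toy model. Everything here is a simplification invented for the example: the `CPU` class has just two "registers" (`counter` and `acc`), and `switch` is a hypothetical helper, not how a real operating system stores program state.

```python
class CPU:
    """Toy CPU with two registers that together make up a program's state."""

    def __init__(self):
        self.counter = 0   # where the running program currently is
        self.acc = 0       # the program's working result so far

    def run(self, steps):
        # Each step adds the current position to the result, then advances.
        for _ in range(steps):
            self.acc += self.counter
            self.counter += 1

def switch(cpu, saved, old, new):
    """Save the state of `old` (if any) and restore the state of `new`."""
    if old is not None:
        saved[old] = (cpu.counter, cpu.acc)
    # A program that has never run starts from a fresh state.
    cpu.counter, cpu.acc = saved.get(new, (0, 0))

cpu = CPU()
saved = {}
current = None
for name in ["A", "B", "A", "B"]:    # alternate two programs, 3 steps each
    switch(cpu, saved, current, name)
    current = name
    cpu.run(3)
saved[current] = (cpu.counter, cpu.acc)  # save the last program's state too

print(saved)  # {'A': (6, 15), 'B': (6, 15)}
```

Each program ends in the same state as if it had run its six steps without interruption, which is the point of saving and restoring.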

The time from when a program is registered in the work queue until it terminates is called the “total processing time.” It is the sum of the “total execution time,” which is the time spent purely executing the program, the “replacement time,” and the “wait time” spent in the work queue. When a program’s total execution time is longer than the interval time, the program needs more than one interval execution, and each additional interval execution adds another replacement time, so the sum of the replacement times grows. However, if the total execution time is less than or equal to the interval time, the program finishes within one interval and the next program is executed immediately.
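A small simulation can make this bookkeeping concrete. It is a sketch under stated assumptions: all programs are registered at time 0, one replacement time is charged between any two consecutive interval executions, and the function name `total_processing_times` and the numbers are hypothetical.

```python
from collections import deque

def total_processing_times(jobs, interval_time, replacement_time):
    """Return each program's total processing time (registration at time 0).

    jobs is a list of (name, total_execution_time) pairs.
    """
    queue = deque(jobs)
    clock = 0
    finished = {}
    while queue:
        name, remaining = queue.popleft()
        ran = min(interval_time, remaining)
        clock += ran
        if remaining > ran:
            queue.append((name, remaining - ran))   # needs another interval
        else:
            finished[name] = clock                  # program terminates here
        if queue:
            # Assumption: one replacement time before the next interval execution.
            clock += replacement_time
    return finished

times = total_processing_times([("A", 25), ("B", 10)],
                               interval_time=10, replacement_time=1)
print(times)  # {'B': 21, 'A': 38}
```

In this run, A's 38 time units break down into 25 of execution, 10 of waiting while B runs, and 3 replacement times. B's 21 break down into 10 of execution, 10 of waiting while A runs, and 1 replacement time.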

Now consider three programs, A, B, and C. If a new program C is started while A is running and B is waiting in the work queue, C is registered after B, so C runs after the interval executions of A and B have ended. If A and B have not finished and each needs another interval, they are registered again after C, so the work queue then holds C, A, and B, in that order. As a result, the three programs are executed repeatedly in the order in which they are registered.
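The queue order in this scenario can be traced step by step. This is only a trace of the registration order, with the program names as plain strings; no execution times are modeled.

```python
from collections import deque

running = "A"
queue = deque(["B"])        # B waits in the work queue while A runs

queue.append("C")           # C is started: it is registered after B

# A's interval ends without A finishing, so A is registered again at the back.
queue.append(running)       # queue: B, C, A
running = queue.popleft()   # B runs next

# B's interval also ends without B finishing.
queue.append(running)       # queue: C, A, B

order = list(queue)
print(order)  # ['C', 'A', 'B']
```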

As the number of programs registered in the work queue increases, the waiting time of each program increases proportionally. The number of programs that can be registered in the work queue therefore needs to be limited, so that the waiting time does not grow beyond a certain level. In addition, operating systems sometimes use a technique called “priority scheduling.” Priority scheduling assigns each program a priority based on its importance, and higher-priority programs run first. This helps manage system resources more efficiently and keeps important tasks from being delayed.
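Priority-based selection can be sketched with a heap. The details are assumptions made for the example: a lower number means a higher priority, programs with equal priority run in registration order, and the program names and the function `priority_order` are invented.

```python
import heapq

def priority_order(jobs):
    """Return program names in the order a priority scheduler would pick them.

    jobs is a list of (priority, name) pairs in registration order.
    Assumption: a lower number means a higher priority.
    """
    heap = []
    for seq, (priority, name) in enumerate(jobs):
        # seq breaks ties so equal-priority programs keep registration order.
        heapq.heappush(heap, (priority, seq, name))
    order = []
    while heap:
        _, _, name = heapq.heappop(heap)
        order.append(name)
    return order

order = priority_order([(2, "backup"), (0, "audio"), (1, "editor"), (0, "keyboard")])
print(order)  # ['audio', 'keyboard', 'editor', 'backup']
```

The backup program was registered first but runs last, because the other programs were given higher priority.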

In the end, CPU scheduling plays an important role in increasing the efficiency and performance of your computer system. It uses various scheduling algorithms to coordinate the execution order of programs and give users the experience of having multiple programs running at the same time. The role of the operating system is crucial in this process, and accurate and efficient scheduling is a key factor in the performance of the system as a whole.